Latency and System Design

Unpacking Different Latencies for Informed Engineering

Many JavaScript frameworks ago (in 2009), Jeffrey Dean presented the famous “Numbers Everyone Should Know” during an engineering all-hands meeting at Google. The list looked something like this:

These numbers gained traction within the engineering community, as they effectively highlighted the magnitude of latency differences across various types of operations. Peter Norvig also references these numbers in his essay, “Teach Yourself Programming in Ten Years”.

Although these numbers continue to be shared and discussed in engineering articles and communities today, there is often a lack of deeper insights into how different latencies can impact the systems being developed and the design decisions they inform. In this article, we will try to explore these areas.

Grasping the Scale

When measurement units are far removed from everyday life, it can be challenging to understand the true differences in magnitude. It's one thing to know these numbers factually, but developing an intuitive sense of their significance is a different matter. A helpful approach is to rescale these operations for a more relatable comparison. For example, consider the operation "Fetch from L1 cache memory," which takes 0.5 nanoseconds. If we scale this operation to represent 1 second for comparison purposes, the relative differences across other operations would look like this:

Now that we understand the differences in the magnitudes of these operations, let’s explore how we can use this information to our advantage. We'll start with how high-performance systems leverage modern CPU architectures.

High-Performance Systems

Modern CPUs use sophisticated mechanisms to improve performance. The CPU cache hierarchy forms the foundation of this interaction, bridging the vast speed difference between fast processors and relatively slow memory, so we will start with it.

CPU Caches Overview

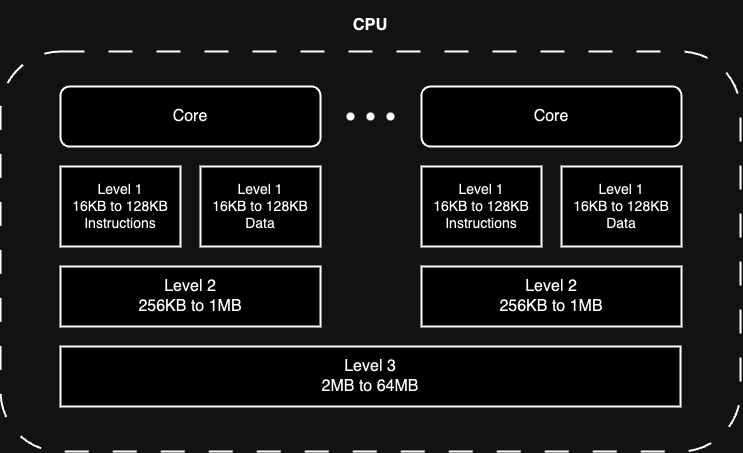

The simplest way to visualize the CPU and its cache hierarchy is as follows:

Level 1 Cache:

Location: Closest to the CPU core, integrated directly.

Speed: Fastest but smallest (16–128 KB per core).

Purpose: Stores frequently accessed data and instructions in separate caches (L1-D for data, L1-I for instructions) to minimize latency (~0.5 ns).

Access: Dedicated to each core.

Level 2 Cache:

Location: On the processor die, slightly farther from the core.

Speed: Slower but larger (256 KB–1 MB per core).

Purpose: Backup to L1, holding more data/instructions (~3–10 ns latency).

Access: Usually dedicated per core, occasionally shared.

Level 3 Cache (not in the original “Numbers Everyone Should Know” list):

Location: Furthest, shared within the CPU package.

Speed: Slower than L2 but faster than main memory (2–64 MB).

Purpose: Shared storage for coordinating multi-core operations (~10–30 ns latency).

Access: Shared across all cores.

Temporal and Spatial Locality

At first glance, it might seem counterintuitive for CPUs to rely on multiple cache levels, as fetching data and filling up the cache adds overhead to executing operations. However, the usefulness of caches lies in two key phenomena observed when analyzing how programs access memory: temporal and spatial locality.

Temporal Locality: Code and data that have been accessed are likely to be accessed again.

Spatial Locality: Memory locations near recently accessed code and data are also likely to be accessed soon.

These principles allow CPUs to make smart predictions about what data will be needed next. To leverage spatial locality, the CPU fetches data from main memory in chunks called cache lines. A cache line is a small, fixed-size block of memory (typically 64 bytes) that represents the smallest unit of data transfer between the CPU cache and main memory. Even if the CPU requests just one byte of data, an entire cache line containing that byte and its neighbouring data is loaded into the cache. This ensures that nearby data is readily available if needed.

For temporal locality, the CPU employs sophisticated cache replacement policies, keeping frequently and recently used data in faster cache levels while pushing less frequently accessed data to slower levels or memory. This creates a natural hierarchy where the most commonly accessed data stays closest to the CPU.

Although an entire program might eventually need all its data and instructions, you can think of memory access as a sliding window that moves across the program. The cache levels keep this "window" of currently needed data and instructions as close to the CPU as possible, reducing the time spent accessing slower main memory.

Cache Hits and Misses

When the CPU requests data, it first checks the cache. This is done by identifying the cache line using an index and comparing the tag to ensure the requested data is present. A cache hit occurs if the data is in the cache, allowing the CPU to access it quickly. A cache miss, however, means the data is not in the cache, requiring the CPU to fetch it from a slower source, such as main memory or the next cache level. This process can take hundreds of memory cycles, making misses expensive.

Branch Predictions

Modern CPUs use branch prediction to improve performance by guessing the outcome of conditional operations (e.g., if statements) before the actual result is known. This is necessary because CPUs execute instructions in pipelines, and waiting to determine the branch direction (e.g., which path to take) would stall the pipeline, wasting valuable cycles. A branch predictor anticipates the next instruction to execute, allowing the pipeline to stay full. If the prediction is correct, execution continues smoothly; if not, the CPU must discard the incorrectly guessed instructions and fetch the correct ones, causing a delay.

False Sharing

False sharing is a subtle performance issue that occurs in multi-threaded programs when different CPU cores write to variables that, while logically separate, happen to reside on the same cache line. When one core modifies its variable, it invalidates the entire cache line for all other cores, forcing them to reload the cache line even though they're accessing different variables. For example, if two threads frequently update different counters that are adjacent in memory, each update will invalidate the cache line for the other thread, causing unnecessary cache coherency traffic and performance degradation. This problem can be particularly insidious because the variables appear independent in the code, yet their physical proximity in memory creates contention. The solution typically involves padding the data structure to ensure frequently accessed variables reside on different cache lines, usually by aligning them to 64-byte boundaries.

SIMD (Single Instruction, Multiple Data)

SIMD is a parallel processing technique used in modern CPUs to perform the same operation on multiple pieces of data simultaneously. Instead of processing data sequentially, SIMD allows a single instruction to operate on multiple data elements stored in vectors or arrays. This is particularly useful for tasks like graphics rendering, image processing, and scientific computations where the same operation (e.g., addition or multiplication) is applied to large datasets. By leveraging SIMD, CPUs can achieve significant performance improvements for these workloads. Modern CPUs include SIMD instruction sets like Intel’s AVX or ARM’s NEON, making it an essential feature for optimizing performance in data-parallel tasks.

What All This Means When Engineering Systems

Modern CPUs are remarkably sophisticated, performing extensive automatic optimizations. Rather than manually optimizing code, our primary task is to write code in ways that let CPUs optimize effectively. This approach usually provides the best return on effort - achieving significant performance gains through simple, CPU-friendly patterns. Only when these automatic optimizations prove insufficient should we consider manual optimization, which requires careful weighing of implementation costs against performance benefits. Here's what this means in practice:

Cache Lines

Modern CPUs read memory in 64-byte (usually) chunks called cache lines. When you access any memory location, the entire cache line is fetched. This means struct layout matters - crossing cache line boundaries or causing false sharing between threads can significantly impact performance:

# cpp

// Poor: Struct likely crosses cache lines

// (76 bytes total)

struct DataPoint {

// 8 bytes

double value;

// 60 bytes

char metadata[60];

// 8 bytes, forces new cache line

double timestamp;

}; // Will occupy 2 cache lines

// Better: Cache-aligned structure

// (64 bytes total)

struct alignas(64) DataPoint {

// 8 bytes

double value;

// 8 bytes

double timestamp;

// 48 bytes to fill the cache line

char metadata[48];

}; // Will fit exactly in 1 cache lineMemory Access Patterns and Data Structures

CPUs excel at predicting and prefetching sequential memory accesses. When designing data structures, consider how you'll access the data. The classic example is the Structure of Arrays versus Array of Structures. For operations on single attributes, SoA often performs better:

# cpp

// AoS: Poor cache utilization for

// single-field operations

struct Particle {

// Position

float x, y, z;

// Velocity

float vx, vy, vz;

};

std::array<Particle, 1000> particles;

// SoA: Better cache utilization when

// processing single attributes

struct ParticleSystem {

// Positions

std::array<float, 1000> x, y, z;

// Velocities

std::array<float, 1000> vx, vy, vz;

};Linked lists, while offering flexible insertion and deletion, often perform poorly on modern hardware due to their scattered memory layout:

# cpp

// Linked lists: Poor cache utilization

// due to scattered memory layout.

// Each node could be anywhere in memory.

// No sequential access pattern for

// prefetcher to detect.

// Each access might trigger a new

// cache line load

struct Node {

int data;

// Pointer to next element scattered in memory

Node* next;

};Branch Predictions and Stalling

Modern CPUs have deep pipelines (15-20 stages) and can predict branch outcomes with impressive accuracy. However, unpredictable branches can stall the entire pipeline. When a prediction is wrong, the CPU must flush its pipeline - discarding all work done on the mispredicted path and reload the correct instructions, which can cost 10-20 cycles or more. Similar stalls occur when data dependencies prevent advance execution - for example, when traversing a linked list, the CPU cannot prefetch the next node's data until it loads the current node's 'next' pointer. This creates a chain of dependent memory loads that stalls the pipeline. When performance is critical, consider making branch patterns predictable or eliminating them entirely:

# cpp

// Predictable pattern - CPU can learn this

for(int i = 0; i < n; i++) {

if(i % 2 == 0) { ... }

}

// Unpredictable - CPU cannot learn this pattern

// network response can arrive at any time

for(int i = 0; i < n; i++) {

// Depends on external events

if(check_network_status()) {

process_data(i);

}

}

// Pipeline stall due to data dependency

while(current != nullptr) {

// CPU must wait for each 'next' load

process(current->data);

// Next address unknown until loaded

current = current->next;

}

// Branchless alternative for simple operations

int max(int a, int b) {

int diff = a - b;

// All 1s if negative, all 0s if positive

int mask = diff >> 31;

return b + (diff & ~mask);

}SIMD Auto-vectorization

Modern CPUs can automatically parallelize operations using SIMD instructions, but only when the code pattern allows it. The key is writing simple, predictable loops without complex branching or data dependencies:

# cpp

// CPU might auto-vectorize this

for (int i = 0; i < n; i++) {

c[i] = a[i] + b[i];

}

// Complex branching prevents auto-vectorization

for (int i = 0; i < n; i++) {

if (a[i] > 0)

c[i] = a[i] + b[i];

else

c[i] = a[i] - b[i];

}Synchronization and Lock Contention

When writing concurrent code, minimize synchronization overhead by keeping critical sections brief. While mutex operations are fast (~25ns), lock contention severely impacts performance as threads waste cycles waiting or get descheduled, with additional overhead from cache invalidation and context switches. Match thread counts to CPU cores for CPU-bound work (higher for I/O-bound). Consider alternatives: lock-free structures with atomics for throughput, read-write locks or RCU for read-heavy workloads, or fine-grained locking and striping to reduce contention.

Input-Output (I/O) Bound Systems

Various software systems face different performance challenges. While scientific computing and graphics engines push for computational efficiency, most everyday applications are constrained by data movement speed rather than processing power. Web servers, log processors, data pipelines, etc., are typically I/O-bound, where disk access, network latency, or database queries create bottlenecks. When a system is I/O-bound, the focus shifts to minimizing and optimizing data movement through techniques like caching frequently accessed data, batching multiple operations together, and using asynchronous operations to hide latency.

I/O Operations Overview

Storage I/O:

Medium: Data access through physical storage like Solid State Drives (SSDs) and Hard Disk Drives (HDDs).

Speeds:

NVMe SSDs: ~10-20 μs latency, 3-7 GB/s throughput.

SATA SSDs: ~50-100μs latency, 550 MB/s throughput.

HDDs (7200 RPM): ~9-10ms latency, 150-200 MB/s throughput.

HDDs (5400 RPM): ~12-15ms latency, 100-150 MB/s throughput.

Purpose: Persistent data storage and retrieval.

Characteristics: Sequential reads/writes are much faster than random access.

Network I/O:

Medium: Data transfer through networks (LAN, internet, cloud).

Speeds:

LAN: ~0.5-5 ms.

Internet/WAN: ~10-200 ms.

Cross-continental: ~200+ ms.

Purpose: Distributed data transfer.

Characteristics: Performance varies greatly based on network conditions and distance.

Buffering and Batching

The efficiency of I/O-bound systems relies heavily on two key principles: reducing the number of operations and maximizing the size of each operation. This is why buffering and batching are fundamental to I/O optimizations:

Buffer Size Impact:

Small buffers lead to many small I/O operations.

Large buffers reduce operation count but increase memory usage.

Optimal buffer size typically aligns with underlying system blocks (e.g., 4KB or 8KB).

Batch Processing Impact:

Amortizes per-operation overhead.

Enables better resource utilization.

Allows for operation coalescing.

What This Means When Engineering Systems

I/O operations are often the bottleneck in web applications, from network calls to making database queries. Below, we explore practical approaches to avoid common issues.

File Access Patterns

Modern file systems are optimized for sequential access and larger block sizes. When reading files line by line or in small chunks, each operation incurs overhead and potentially triggers a new disk operation. Consider log processing - reading a file line by line forces the system to perform thousands of small I/O operations instead of fewer, larger ones. A better approach uses buffered reads with larger chunks that align with the system's page size.

SSDs When Available

While buffered reads remain important, SSDs fundamentally change some traditional file system assumptions. Unlike HDDs, which suffer severe penalties for random access due to mechanical seek times, SSDs can perform random reads almost as quickly as sequential ones. This makes techniques like memory mapping particularly effective on SSDs. However, SSDs come with their own considerations - they have limited write cycles and perform best with aligned writes matching their internal page size (typically 4KB or 8KB). For systems handling intensive I/O workloads, using SSDs can provide 10-20x performance improvements, making them particularly valuable for applications where access patterns are less predictable and latency is critical.

Database Query Batching and Avoiding N+1 Issues

The infamous N+1 query problem is a classic example of poor I/O patterns in database operations. For example, when fetching a list of users and their orders, naive code might query once for users and then once per user for their orders, resulting in N+1 database roundtrips. Instead, proper query design uses joins/bulk loading:

# python

# N+1 problem: one query per user

users = db.query("SELECT * FROM users")

for user in users:

# Additional query for each user

orders = db.query(

f"SELECT * FROM orders WHERE user_id = {user.id}"

)

...

# Efficient: Single query with join

users_with_orders = db.query("""

SELECT users.*, orders.*

FROM users

LEFT JOIN orders ON users.id = orders.user_id

WHERE users.id IN (...)

""")The N+1 pattern's performance impact is deceptive. Even with a fast network roundtrip of just 5ms within the same datacenter, the overhead adds up quickly. For an application with 1,000 users, this means 5 seconds of pure network latency. Worse yet, this overhead grows linearly with your user base - double the users, double the delay. It’s a performance nightmare.

Eliminate Redundant I/O

A common mistake in machine learning services is persisting data that only needs temporary processing. For example, when building model prediction services, developers often unnecessarily save files to disk even though they only need the data briefly in memory. Consider this pattern with cloud storage:

# python

# Bad: Two I/O operations

def process_image(gcs_path):

# First I/O: Download from network to slow disk

local_path = "/tmp/image.jpg"

gcs.download_to_file(gcs_path, local_path)

# Second I/O: Read from disk into process memory

image = load_image(local_path)

return make_predictions(image)

# Better: Single I/O operation

def process_image(gcs_path):

# Load directly from network to process

# memory since we only need it temporarily

image_bytes = gcs.download_as_bytes(gcs_path)

image = load_image_from_bytes(image_bytes)

return make_predictions(image)This pattern creates unnecessary slow disk operations and latency - instead of loading data directly into memory, it adds an extra round trip to disk by temporarily storing and then re-reading the file. Always consider whether intermediate storage is truly necessary, as eliminating redundant I/O operations can significantly improve performance.

Concurrent Execution/Minimizing Network Round Trips

Network operations suffer when code treats remote calls like local function calls. Each HTTP request or RPC call incurs significant latency, and doing them sequentially multiplies the delay. Modern systems use batching and asynchronous operations to minimize round trips and hide latency:

# python

# Poor: Sequential network requests

for user_id in user_ids:

# Individual network call

user = await api.get_user(user_id)

process_user(user)

# Better: Either...

# Option 1: Single batched network request

# Single network call for all users

# if API allows

users = await api.get_users(user_ids)

for user in users:

process_user(user)

# Option 2: Concurrent network requests

# Concurrent execution of requests

users = await asyncio.gather(*[

api.get_user(id) for id in user_ids

])

for user in users:

process_user(user)Sequential remote calls can be a performance nightmare, just like the N+1 problem. When requests could be executed concurrently, drastically reducing system wait time, we process them one after another, forcing our system to wait needlessly. The solution comes in two forms: batching multiple requests into a single network call (when the API supports it) or executing multiple individual requests concurrently (when batching isn't available). Both approaches can dramatically reduce total system wait time compared to sequential execution.

Memory Mapping for Efficient File Access

For large files, traditional read operations copy data multiple times: from disk to kernel buffer, then to user space buffer. Memory mapping eliminates these copies by mapping file contents directly into memory space. This is particularly effective for large files that are read multiple times or accessed randomly:

# python

# Traditional: Multiple copies

with open('large_file.dat', 'rb') as f:

# Copies data to user space

data = f.read()

# Memory mapped: Zero-copy

with mmap.mmap('large_file.dat', 0, access=mmap.ACCESS_READ) as mm:

# Direct memory access

data = mm[offset:offset+length]Caching

Caching is crucial for performance optimizations, acting as a memory layer to store frequently accessed data. While it significantly reduces latency and server load, implementing caching requires careful consideration. Before building your own caching systems, consider using proven systems like Redis or Memcached, or your framework's built-in caching mechanisms. These handle complex scenarios like invalidation timing and data consistency that often become significant challenges in custom implementations.

Conclusions

Modern CPUs excel at optimizing code, but they need predictable patterns to do so effectively. The key to high performance is writing simple, clean code that aligns with how CPUs work. This means using data structures that fit neatly into CPU cache lines and follow natural memory access patterns. When you organize your code this way - an approach called data-oriented design - the CPU can automatically optimize much of your code. This technique is particularly popular in game development and high-performance computing. For a more detailed dive into data-oriented design, I would suggest reading Richard Fabian's book "Data-Oriented Design”.

For I/O-bound systems, optimizations revolve around minimizing data movement and reducing latency through strategic tradeoffs. This means leveraging memory mapping for large files, batching operations when possible, choosing appropriate storage media, and eliminating unnecessary data copies. Techniques like caching and concurrent execution can help greatly, but the fundamental goal should be reducing the total number and cost of I/O operations. Common crimes like N+1 queries and sequential API calls are so against these principles that any engineer who implements them should be sentenced to maintaining legacy COBOL systems until they learn the error of their ways.

Core principles remain similar whether optimizing for CPU or I/O performance. First understand your hardware's capabilities, then structure your code to work with these constraints rather than fight against them.